license: mit

IEPile: A Large-Scale Information Extraction Corpus

This is the official repository for IEPile: Unearthing Large-Scale Schema-Based Information Extraction Corpus

IEPile dataset download links: Google Drive | Hugging Face

Please note that the data available through the links above excludes all content related to the ACE2005 dataset. If you need the complete, unfiltered dataset and have obtained the necessary permissions, contact us by email at [email protected] or [email protected], and we will provide the complete dataset.

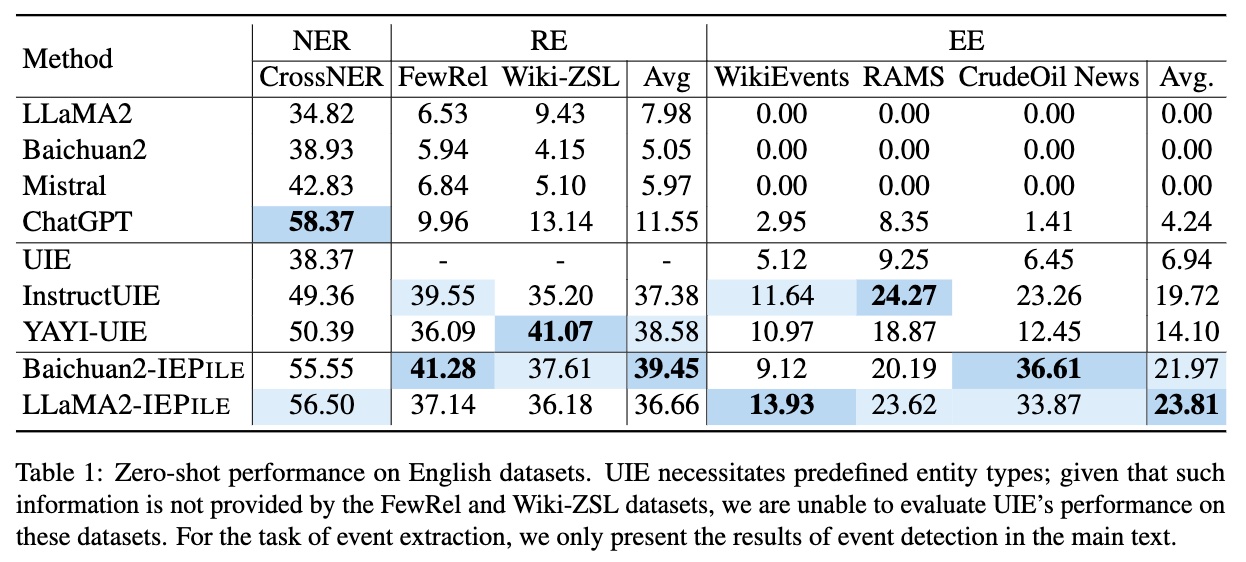

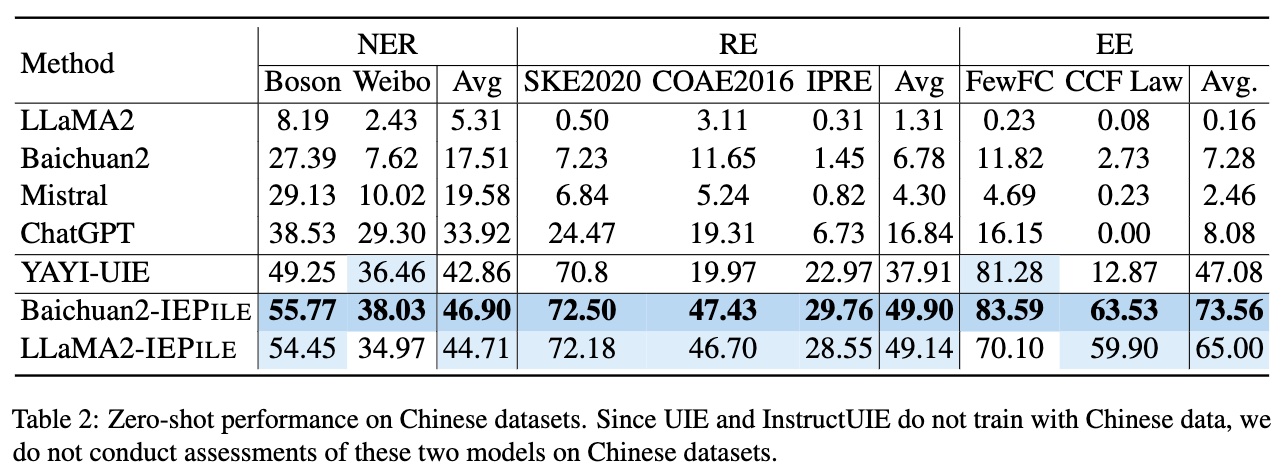

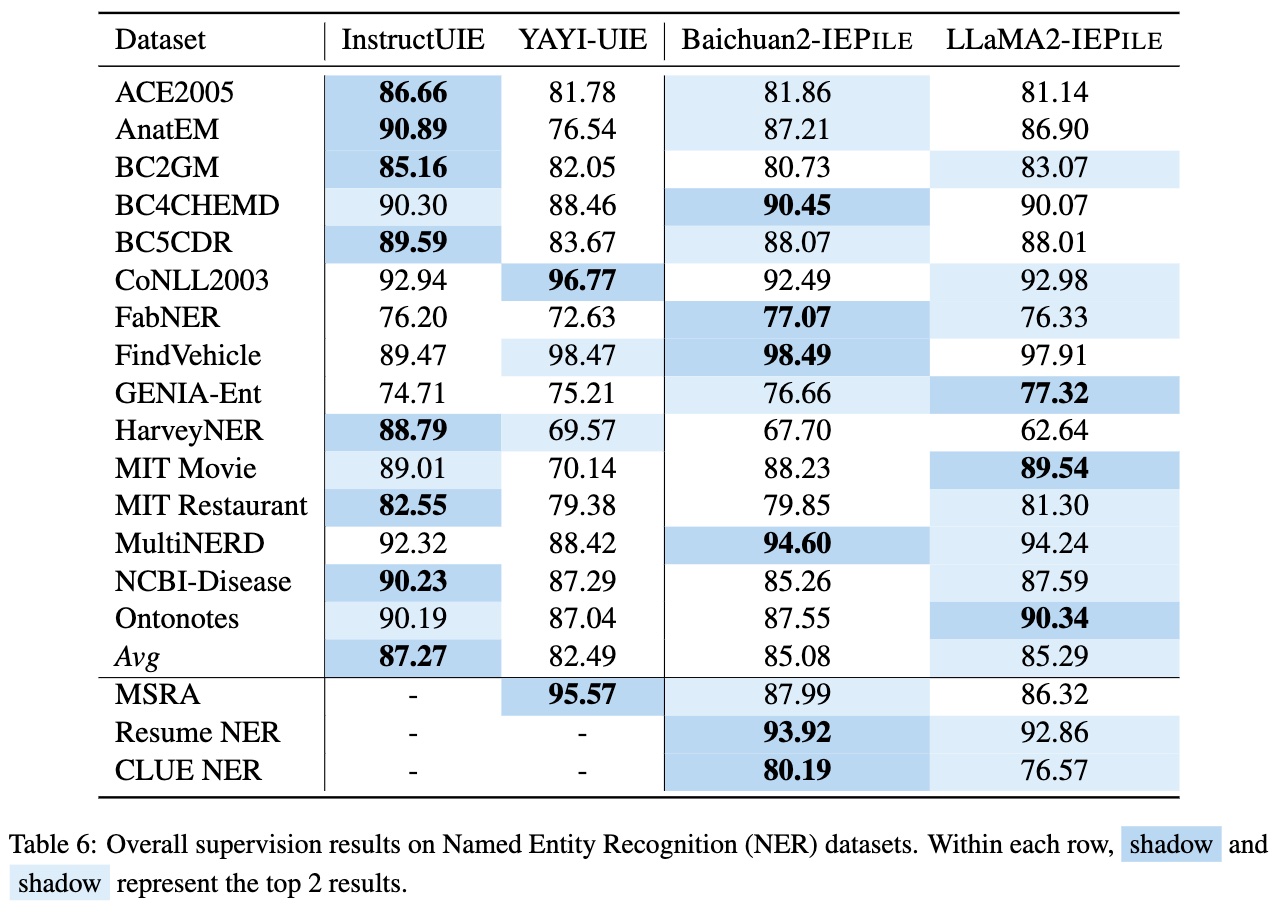

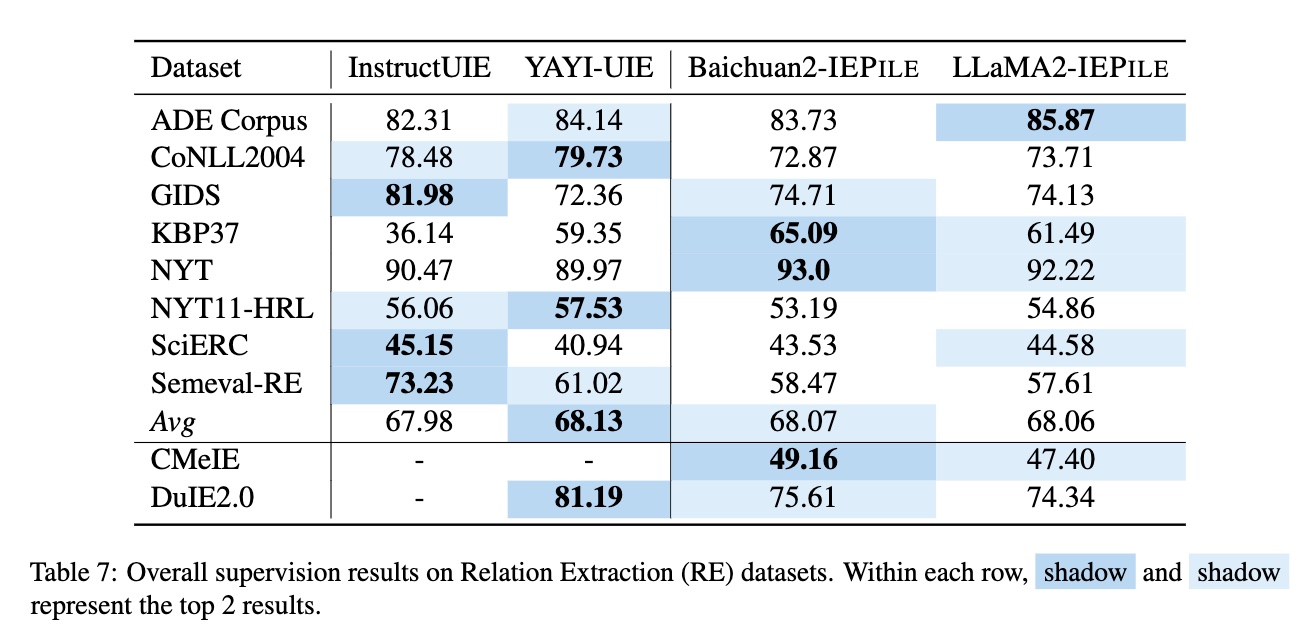

We have meticulously collected and cleaned existing Information Extraction (IE) datasets, integrating a total of 26 English IE datasets and 7 Chinese IE datasets. As shown in Figure 1, these datasets cover multiple domains including general, medical, financial, and others.

In this study, we adopted the proposed "schema-based batched instruction generation method" to successfully create a large-scale, high-quality IE fine-tuning dataset named IEPile, containing approximately 0.32B tokens.
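This page does not describe the generation method in detail. As a rough illustration only, the sketch below assumes that "schema-based batched" means splitting the full label set of a dataset into fixed-size chunks and issuing one instruction per chunk for each input text. The function names, the JSON field names (`instruction`, `schema`, `input`), the prompt wording, and the batch size are all hypothetical and are not taken from the IEPile code.

```python
import json
from typing import List


def batch_schema(labels: List[str], batch_size: int) -> List[List[str]]:
    """Split the label set into consecutive chunks of at most batch_size labels."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [labels[i:i + batch_size] for i in range(0, len(labels), batch_size)]


def build_instructions(text: str, labels: List[str], batch_size: int = 4) -> List[str]:
    """Build one JSON-encoded instruction per schema chunk for a single input text."""
    # Hypothetical prompt wording; the real corpus may phrase this differently.
    prompt = "Extract entities of the listed types from the input."
    return [
        json.dumps({"instruction": prompt, "schema": chunk, "input": text},
                   ensure_ascii=False)
        for chunk in batch_schema(labels, batch_size)
    ]


# Example: five labels with a batch size of two yield three instructions.
labels = ["person", "organization", "location", "date", "event"]
records = build_instructions("Alan Turing was born in London.", labels, batch_size=2)
for record in records:
    print(record)
```

Under this assumption, each instruction asks the model about only a small part of the schema, so the number of labels per query stays bounded regardless of how large the label set of a dataset is.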

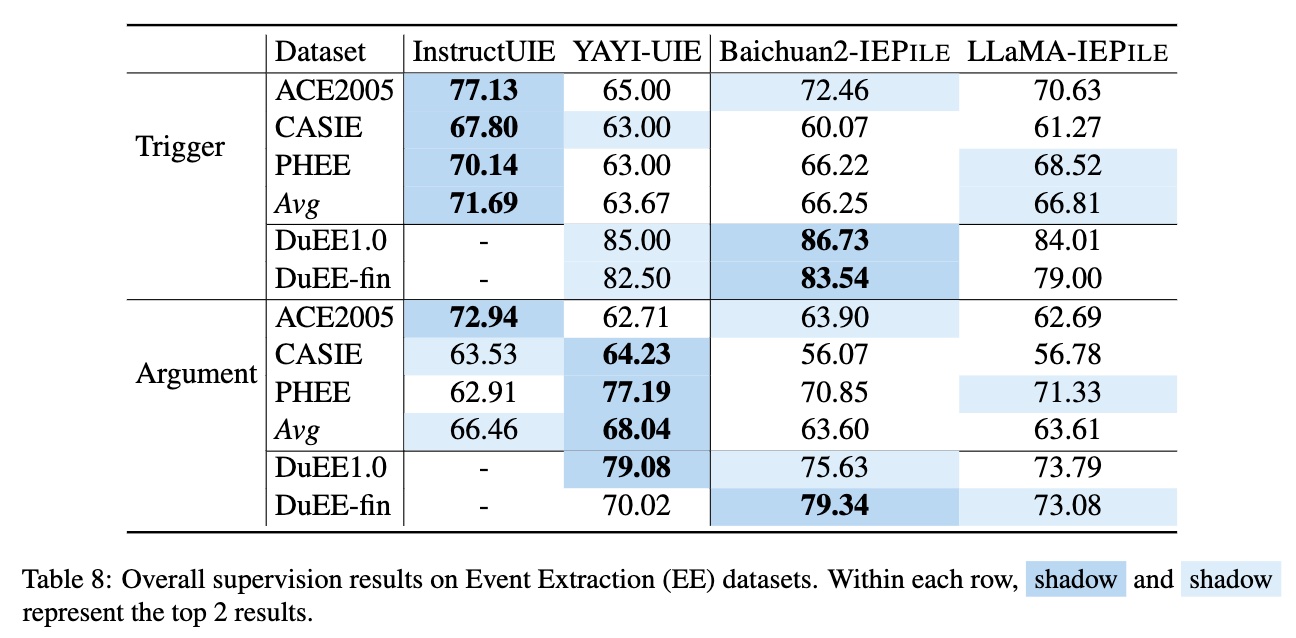

Based on IEPile, we fine-tuned the Baichuan2-13B-Chat and LLaMA2-13B-Chat models using LoRA. In our experiments, the fine-tuned Baichuan2-IEPile and LLaMA2-IEPile models perform well on fully supervised training sets and show improvements on zero-shot information extraction tasks.

Using IEPile to Train Models

Please visit our official GitHub repository for a comprehensive guide on training and inference with IEPile.

Statement and License

We believe that annotated data contains the wisdom of humanity, and its existence is to promote the benefit of all humankind and help enhance our quality of life. We strongly urge all users not to use our corpus for any actions that may harm national or public security or violate legal regulations. We have done our best to ensure the quality and legality of the data provided. However, we also recognize that despite our efforts, there may still be some unforeseen issues, such as concerns about data protection and risks and problems caused by data misuse. We will not be responsible for these potential problems. For original data that is subject to usage permissions stricter than the CC BY-NC-SA 4.0 agreement, IEPile will adhere to those stricter terms. In all other cases, our operations will be based on the CC BY-NC-SA 4.0 license agreement.

Limitations

From the data perspective, our study focuses primarily on schema-based IE, which limits generalization to human instructions that do not follow our specific format requirements. We also do not explore Open Information Extraction (Open IE); however, if the schema constraints were removed, our dataset would be suitable for Open IE scenarios. Finally, IEPile is limited to English and Chinese data, and we hope to include more languages in the future.

From the model perspective, due to computational resource limitations, our research assessed only two models, Baichuan and LLaMA, along with some baseline models. Our dataset can be applied to any other large language model (LLM), such as Qwen, ChatGLM, and Gemma.

Cite

If you use IEPile or the code, please cite the paper:

Acknowledgements

We are very grateful for the inspiration provided by the MathPile and KnowledgePile projects. Special thanks are due to the builders and maintainers of the following datasets: AnatEM, BC2GM, BC4CHEMD, NCBI-Disease, BC5CDR, HarveyNER, CoNLL2003, GENIA, ACE2005, MIT Restaurant, MIT Movie, FabNER, MultiNERD, Ontonotes, FindVehicle, CrossNER, MSRA NER, Resume NER, CLUE NER, Weibo NER, Boson, ADE Corpus, GIDS, CoNLL2004, SciERC, Semeval-RE, NYT11-HRL, KBP37, NYT, Wiki-ZSL, FewRel, CMeIE, DuIE, COAE2016, IPRE, SKE2020, CASIE, PHEE, CrudeOilNews, RAMS, WikiEvents, DuEE, DuEE-Fin, FewFC, CCF law, and more. These datasets have significantly contributed to the advancement of this research. We are also grateful for the valuable contributions in the field of information extraction made by InstructUIE and YAYI-UIE, both in terms of data and model innovation. Our research results have benefitted from their creativity and hard work as well. Additionally, our heartfelt thanks go to hiyouga/LLaMA-Factory; our fine-tuning code implementation owes much to their work. The assistance provided by these academic resources has been instrumental in the completion of our research, and for this, we are deeply appreciative.