
---
title: README
emoji: 🐢
colorFrom: purple
colorTo: gray
sdk: static
pinned: false
---

Intel and Hugging Face are building powerful optimization tools to accelerate training and inference with Transformers.

Intel optimizes widely adopted and innovative AI software tools, frameworks, and libraries for Intel® architecture. Whether you are computing locally or deploying AI applications on a massive scale, your organization can achieve peak performance with AI software optimized for Intel® Xeon® Scalable platforms.

Intel’s engineering collaboration with Hugging Face offers state-of-the-art hardware and software acceleration to train, fine-tune and predict with Transformers.

Useful Resources:

- Intel AI + Hugging Face partner page

- Intel AI GitHub

- Developer Resources from Intel and Hugging Face

Get Started

1. Intel Acceleration Libraries

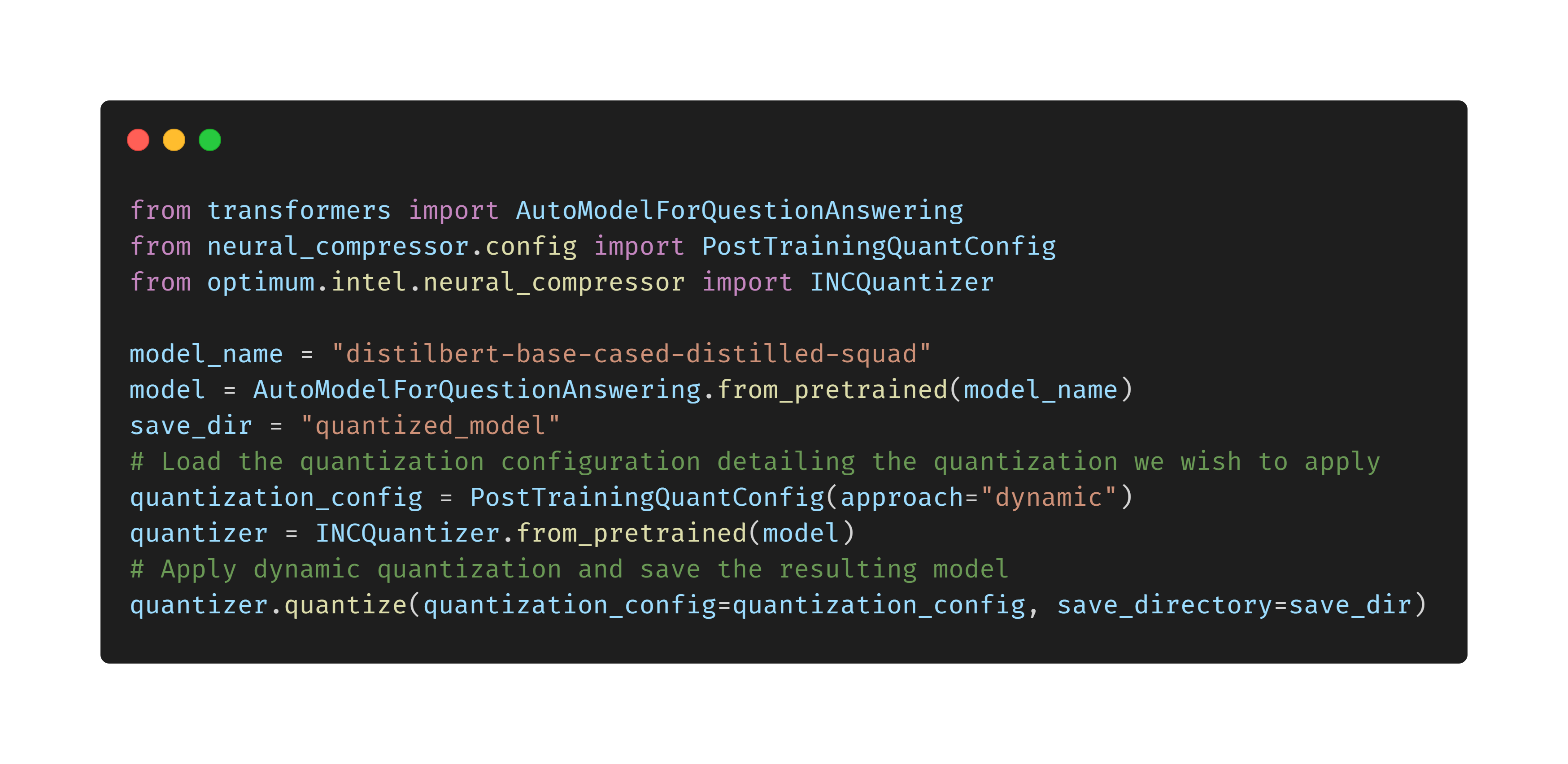

To get started with Intel hardware and software optimizations, download and install the Optimum Intel and Intel® Extension for Transformers libraries. Refer to each library's documentation to learn how to install and use it.

The Optimum Intel library primarily provides hardware acceleration, while Intel® Extension for Transformers focuses more on software acceleration. Install both to get the best performance and productivity gains when fine-tuning models or applying transfer learning with Hugging Face.
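Before moving on, it can help to confirm that both libraries are importable in the active Python environment. The sketch below is an illustrative check, not part of either library. It assumes the import names `optimum.intel` and `intel_extension_for_transformers` (published on PyPI as `optimum-intel` and `intel-extension-for-transformers`), and it only checks availability; it does not verify versions or hardware support.

```python
import importlib.util

# Import names (not PyPI package names) of the two libraries named above.
REQUIRED_MODULES = {
    "Optimum Intel": "optimum.intel",
    "Intel Extension for Transformers": "intel_extension_for_transformers",
}


def is_importable(module_name: str) -> bool:
    """Return True if module_name can be found on the import path.

    For dotted names, find_spec imports the parent package first, so a
    missing or broken parent (e.g. "optimum") raises instead of returning None.
    """
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        return False


def check_intel_libraries() -> dict:
    """Map each library's display name to whether it is importable."""
    return {name: is_importable(mod) for name, mod in REQUIRED_MODULES.items()}


if __name__ == "__main__":
    for name, ok in check_intel_libraries().items():
        print(f"{name}: {'found' if ok else 'missing'}")
```

If either entry reports `missing`, install the corresponding package with `pip` before continuing.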

2. Find Your Model

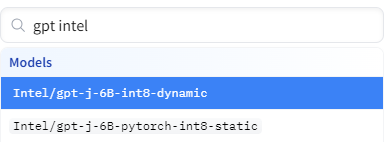

Next, find your desired model (and dataset) using the search box at the top-left of the Hugging Face website. Add “intel” to your query to narrow the results to models pretrained by Intel.
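The same search can be opened from a script by building the Hub's model-search URL. This is a small sketch under stated assumptions: the helper name `hub_search_url` is hypothetical, it assumes the Hub accepts the query as a `search` parameter and a task filter as `pipeline_tag`, and how multi-word queries are matched is up to the Hub's own search.

```python
from typing import Optional
from urllib.parse import urlencode

HUB_MODELS_URL = "https://huggingface.co/models"


def hub_search_url(query: str, add_intel: bool = True, task: Optional[str] = None) -> str:
    """Build a Hugging Face Hub model-search URL (hypothetical helper).

    Appends "intel" to the query, mirroring the tip above, unless the word
    is already present. `task` is sent as the `pipeline_tag` parameter,
    which is an assumption about how the Hub encodes its task filter.
    """
    terms = query.split()
    if add_intel and "intel" not in (t.lower() for t in terms):
        terms.append("intel")
    params = {"search": " ".join(terms)}
    if task:
        params["pipeline_tag"] = task
    # urlencode escapes spaces as "+", e.g. "bert intel" -> "bert+intel".
    return f"{HUB_MODELS_URL}?{urlencode(params)}"
```

For example, `hub_search_url("sentiment", task="text-classification")` yields a link you can paste into a browser. For fully programmatic searches, the `huggingface_hub` library's `HfApi.list_models` accepts `search` and `author` arguments instead.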

3. Read Through the Demo, Dataset, and Quick-Start Commands

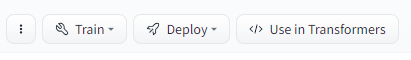

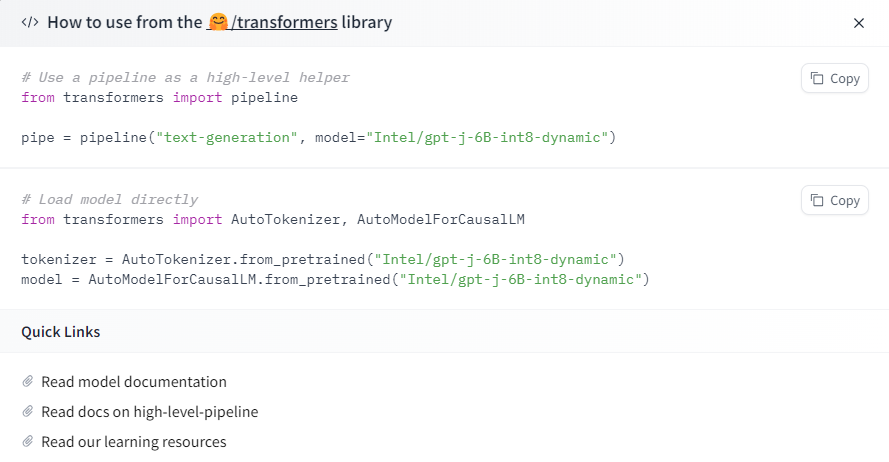

On the model’s page (called a “Model Card”), you will find a description and usage information, an embedded inference demo, and the associated dataset. In the upper-right corner of the page, click “Use in Transformers” for code snippets showing how to load the model into your own workspace with a Hugging Face pipeline and tokenizer.
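The snippets behind “Use in Transformers” usually follow two patterns: a `pipeline` for quick inference, or loading the tokenizer and model directly. The sketch below wraps both in small helpers. The function names are illustrative, not part of Transformers. `pipeline`, `AutoTokenizer.from_pretrained`, and `AutoModel.from_pretrained` are standard Transformers APIs, and `model_id` is whatever repository ID the Model Card shows. Calling either helper downloads the model weights from the Hub.

```python
from typing import Any, Tuple


def load_pipeline(task: str, model_id: str) -> Any:
    """High-level route: let Transformers choose the tokenizer and model for a task."""
    # Imported inside the function so this file loads even without transformers.
    from transformers import pipeline

    return pipeline(task, model=model_id)


def load_tokenizer_and_model(model_id: str) -> Tuple[Any, Any]:
    """Lower-level route: load the tokenizer and base model explicitly."""
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # AutoModel returns the base model without a task-specific head.
    model = AutoModel.from_pretrained(model_id)
    return tokenizer, model
```

The snippet on a given Model Card may use a task-specific class such as `AutoModelForSequenceClassification` in place of `AutoModel`; when it does, prefer the class the card shows so the model loads with its trained head.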