Model description

This model is an attempt to solve the 2025 FrugalAI challenge.

Intended uses & limitations

[More Information Needed]

Training Procedure

Trained using a quick random search.
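The card does not state the search space, the number of iterations, or the scoring used, so the following is only a minimal sketch of what a random search over such a pipeline could look like with scikit-learn's `RandomizedSearchCV`. The toy texts, labels, parameter ranges, `n_iter`, and `cv` values are all assumptions made for illustration, and the lemmatizer step is omitted for brevity.

```python
from scipy.stats import randint, uniform
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import RandomizedSearchCV
from sklearn.pipeline import Pipeline

# Toy placeholder data (hypothetical); the real challenge data is not part of this card.
texts = [
    "climate change is a hoax",
    "the climate is not changing",
    "warming is a hoax and a lie",
    "the planet is not warming",
    "scientists agree the climate is warming",
    "the data shows warming is real",
    "climate data shows real change",
    "scientists agree change is real",
]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

pipeline = Pipeline(steps=[
    ("tfidf", TfidfVectorizer()),
    ("rf", RandomForestClassifier(random_state=0)),
])

# Assumed search space: the card only reports the selected values, not the ranges.
param_distributions = {
    "rf__max_depth": randint(2, 20),
    "rf__max_features": uniform(0.05, 0.45),  # samples from [0.05, 0.5]
    "rf__min_samples_leaf": randint(1, 10),
    "rf__min_samples_split": randint(2, 10),
    "rf__n_estimators": randint(10, 50),
}

search = RandomizedSearchCV(
    pipeline,
    param_distributions=param_distributions,
    n_iter=5,            # a "quick" search: only a handful of sampled candidates
    cv=2,
    scoring="accuracy",
    random_state=0,
)
search.fit(texts, labels)

print(search.best_params_)
print(search.best_score_)
```

Sampling `max_features` from a continuous distribution would explain a non-round selected value such as `0.1329083085318658`, although the card does not confirm how that value was obtained.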

Hyperparameters

| Hyperparameter | Value |
|---|---|
| memory | |
| steps | [('lemmatizer', FunctionTransformer(func=<function lemmatize_X at 0x7f5f1dd2fe50>)), ('tfidf', TfidfVectorizer(max_df=0.95, min_df=2, stop_words=['out', "mustn't", 'were', 'yours', 'ain', 'have', ';', '&', 'has', '+', "needn't", "you've", 'an', 'above', 'wouldn', 'll', 'aren', "should've", 'why', 'under', 'does', 'who', "you'd", 'is', 'itself', 'did', 'through', 'will', "shouldn't", 's', ...], tokenizer=<function tokenize_quote at 0x7f5f1dd73c10>)), ('rf', RandomForestClassifier(max_depth=14, max_features=0.1329083085318658, min_samples_leaf=7, min_samples_split=9, n_estimators=900, n_jobs=-1))] |
| transform_input | |
| verbose | False |
| lemmatizer | FunctionTransformer(func=<function lemmatize_X at 0x7f5f1dd2fe50>) |
| tfidf | TfidfVectorizer(max_df=0.95, min_df=2, stop_words=['out', "mustn't", 'were', 'yours', 'ain', 'have', ';', '&', 'has', '+', "needn't", "you've", 'an', 'above', 'wouldn', 'll', 'aren', "should've", 'why', 'under', 'does', 'who', "you'd", 'is', 'itself', 'did', 'through', 'will', "shouldn't", 's', ...], tokenizer=<function tokenize_quote at 0x7f5f1dd73c10>) |
| rf | RandomForestClassifier(max_depth=14, max_features=0.1329083085318658, min_samples_leaf=7, min_samples_split=9, n_estimators=900, n_jobs=-1) |
| lemmatizer__accept_sparse | False |
| lemmatizer__check_inverse | True |
| lemmatizer__feature_names_out | |
| lemmatizer__func | <function lemmatize_X at 0x7f5f1dd2fe50> |
| lemmatizer__inv_kw_args | |
| lemmatizer__inverse_func | |
| lemmatizer__kw_args | |
| lemmatizer__validate | False |
| tfidf__analyzer | word |
| tfidf__binary | False |
| tfidf__decode_error | strict |
| tfidf__dtype | <class 'numpy.float64'> |
| tfidf__encoding | utf-8 |
| tfidf__input | content |
| tfidf__lowercase | True |
| tfidf__max_df | 0.95 |
| tfidf__max_features | |
| tfidf__min_df | 2 |
| tfidf__ngram_range | (1, 1) |
| tfidf__norm | l2 |
| tfidf__preprocessor | |
| tfidf__smooth_idf | True |
| tfidf__stop_words | ['out', "mustn't", 'were', 'yours', 'ain', 'have', ';', '&', 'has', '+', "needn't", "you've", 'an', 'above', 'wouldn', 'll', 'aren', "should've", 'why', 'under', 'does', 'who', "you'd", 'is', 'itself', 'did', 'through', 'will', "shouldn't", 's', ']', 'should', "mightn't", 'my', 'ourselves', 'the', 'both', 'up', 'but', 'more', 're', 'weren', "you'll", 'over', 'there', 'it', '#', 'that', 'what', 'just', 'mustn', 'not', ':', 'further', 'had', "wouldn't", 'him', "weren't", 'a', 'doing', 'own', '=', 'me', 'mightn', 'ma', 'this', 'theirs', 'was', "shan't", 'can', 'themselves', '.', 'shouldn', 'y', 'about', '>', 'yourselves', 'on', 'once', 'against', 'few', 'you', '*', 'while', 'hadn', 'below', ' |
| tfidf__strip_accents | |
| tfidf__sublinear_tf | False |
| tfidf__token_pattern | (?u)\b\w\w+\b |
| tfidf__tokenizer | <function tokenize_quote at 0x7f5f1dd73c10> |
| tfidf__use_idf | True |
| tfidf__vocabulary | |
| rf__bootstrap | True |
| rf__ccp_alpha | 0.0 |
| rf__class_weight | |
| rf__criterion | gini |
| rf__max_depth | 14 |
| rf__max_features | 0.1329083085318658 |
| rf__max_leaf_nodes | |
| rf__max_samples | |
| rf__min_impurity_decrease | 0.0 |
| rf__min_samples_leaf | 7 |
| rf__min_samples_split | 9 |
| rf__min_weight_fraction_leaf | 0.0 |
| rf__monotonic_cst | |
| rf__n_estimators | 900 |
| rf__n_jobs | -1 |
| rf__oob_score | False |
| rf__random_state | |
| rf__verbose | 0 |
| rf__warm_start | False |
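The non-default values in the table can be read as a three-step scikit-learn pipeline. The sketch below shows how such a pipeline might be rebuilt. The bodies of `lemmatize_X` and `tokenize_quote` are hypothetical stand-ins, since the card only shows their names, and `STOP_WORDS` is a short subset of the listed stop words rather than the full list.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import FunctionTransformer


def lemmatize_X(X):
    """Hypothetical stand-in: the real lemmatizer is not shown in the card."""
    return [text.lower() for text in X]


def tokenize_quote(text):
    """Hypothetical stand-in: plain whitespace tokenization."""
    return text.split()


# Abbreviated subset of the stop words listed in the table (assumption: the
# full list would be passed in practice).
STOP_WORDS = ["out", "were", "an", "is", "the", "a"]

pipeline = Pipeline(steps=[
    ("lemmatizer", FunctionTransformer(func=lemmatize_X)),
    ("tfidf", TfidfVectorizer(
        max_df=0.95,
        min_df=2,
        stop_words=STOP_WORDS,
        tokenizer=tokenize_quote,
    )),
    ("rf", RandomForestClassifier(
        max_depth=14,
        max_features=0.1329083085318658,
        min_samples_leaf=7,
        min_samples_split=9,
        n_estimators=900,
        n_jobs=-1,
    )),
])

# get_params() returns the flattened "<step>__<parameter>" names used in the table.
params = pipeline.get_params()
print(params["rf__n_estimators"], params["tfidf__min_df"])
```

Because a custom `tokenizer` is supplied, the `tfidf__token_pattern` value in the table is not used by `TfidfVectorizer`; scikit-learn ignores the pattern when a tokenizer is given.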

Model Plot

Pipeline(steps=[('lemmatizer', FunctionTransformer(func=<function lemmatize_X at 0x7f5f1dd2fe50>)), ('tfidf', TfidfVectorizer(max_df=0.95, min_df=2, stop_words=['out', "mustn't", 'were', 'yours', 'ain', 'have', ';', '&', 'has', '+', "needn't", "you've", 'an', 'above', 'wouldn', 'll', 'aren', "should've", 'why', 'under', 'does', 'who', "you'd", 'is', 'itself', 'did', 'through', 'will', "shouldn't", 's', ...], tokenizer=<function tokenize_quote at 0x7f5f1dd73c10>)), ('rf', RandomForestClassifier(max_depth=14, max_features=0.1329083085318658, min_samples_leaf=7, min_samples_split=9, n_estimators=900, n_jobs=-1))])

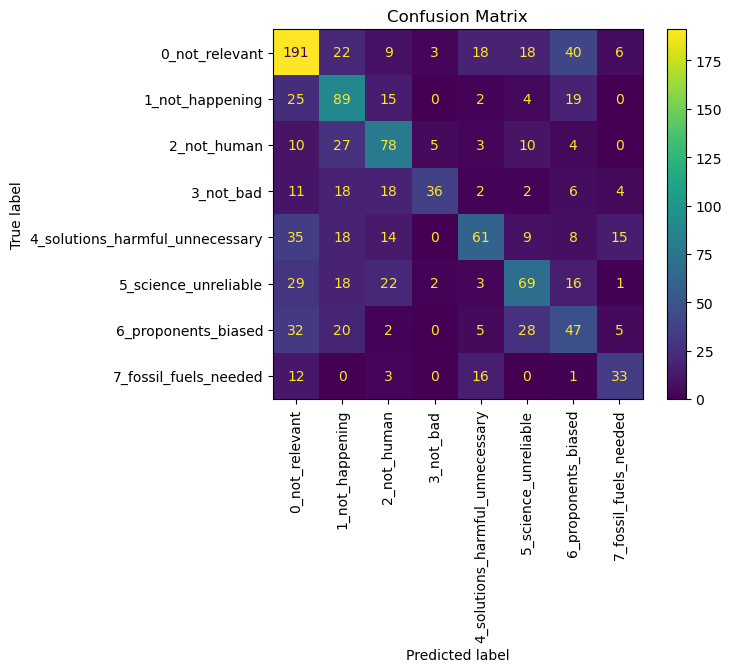

Evaluation Results

| Metric | Value |
|---|---|
| accuracy | 0.495488 |
| f1_score | 0.482301 |
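The card does not say how the F1 score was averaged across classes or which split it was computed on. As a rough illustration of the two metrics, the sketch below computes accuracy and a macro-averaged F1 in plain Python; macro averaging and the toy labels are assumptions, not details taken from the card.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (assumed averaging scheme)."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels (hypothetical), only to show the calls.
y_true = [0, 1, 2, 1]
y_pred = [0, 2, 2, 1]
print(accuracy(y_true, y_pred))
print(macro_f1(y_true, y_pred))
```

With imbalanced classes, accuracy and macro F1 can diverge noticeably, which is one reason to report both.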

How to Get Started with the Model

[More Information Needed]

Model Card Authors

This model card was written by the following authors:

[More Information Needed]

Model Card Contact

You can contact the model card authors through the following channels: [More Information Needed]

Citation

Below you can find information related to citation.

BibTeX:

[More Information Needed]

Confusion Matrix
