LLaMA-2-13b-chat_CounterSpeech_Mu

This LLaMA-2-13b-chat adapter is released as part of the WWW 2025 paper "Contextualized Counterspeech: Strategies for Adaptation, Personalization, and Evaluation" (Cima L. et al., 2025). If you use this model in your work, we kindly ask you to cite our paper:

@misc{cima2024contextualizedcounterspeechstrategiesadaptation,
  title={Contextualized Counterspeech: Strategies for Adaptation, Personalization, and Evaluation},
  author={Lorenzo Cima and Alessio Miaschi and Amaury Trujillo and Marco Avvenuti and Felice Dell'Orletta and Stefano Cresci},
  year={2024},
  eprint={2412.07338},
  archivePrefix={arXiv},
  primaryClass={cs.HC},
  url={https://arxiv.org/abs/2412.07338},
}

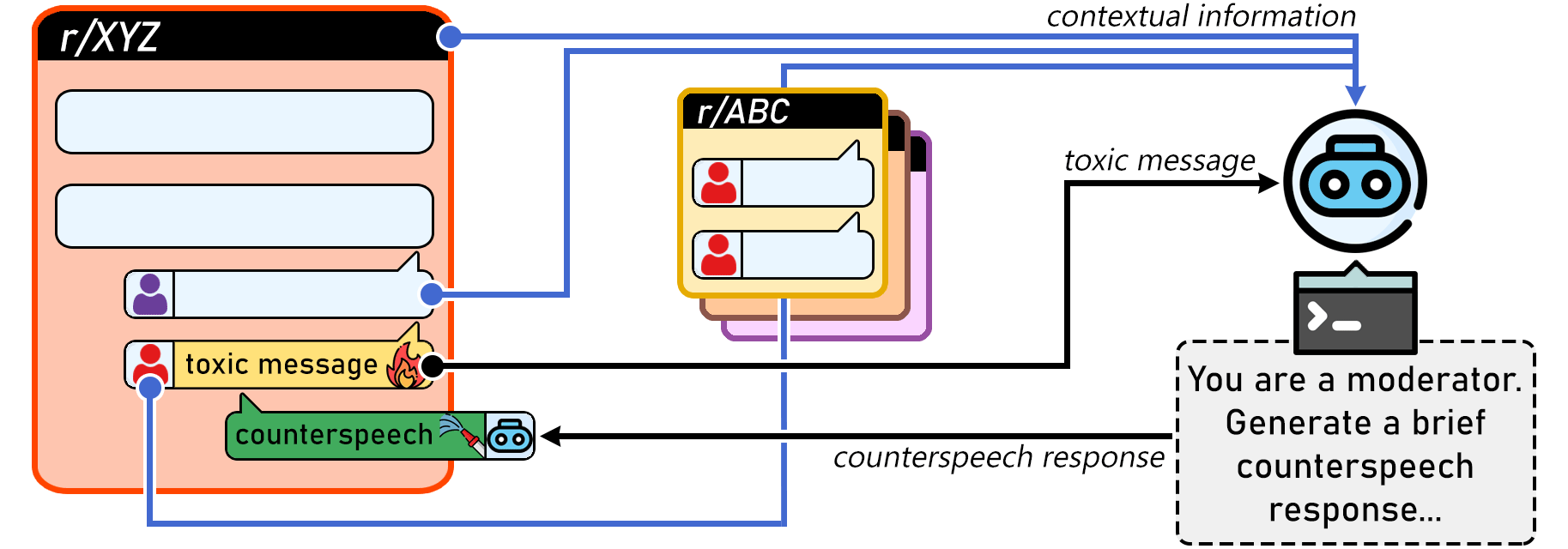

Abstract: AI-generated counterspeech offers a promising and scalable strategy to curb online toxicity through direct replies that promote civil discourse. However, current counterspeech is one-size-fits-all, lacking adaptation to the moderation context and the users involved. We propose and evaluate multiple strategies for generating tailored counterspeech that is adapted to the moderation context and personalized for the moderated user. We instruct a LLaMA2-13B model to generate counterspeech, experimenting with various configurations based on different contextual information and fine-tuning strategies. We identify the configurations that generate persuasive counterspeech through a combination of quantitative indicators and human evaluations collected via a pre-registered mixed-design crowdsourcing experiment. Results show that contextualized counterspeech can significantly outperform state-of-the-art generic counterspeech in adequacy and persuasiveness, without compromising other characteristics. Our findings also reveal a poor correlation between quantitative indicators and human evaluations, suggesting that these methods assess different aspects and highlighting the need for nuanced evaluation methodologies. The effectiveness of contextualized AI-generated counterspeech and the divergence between human and algorithmic evaluations underscore the importance of increased human-AI collaboration in content moderation.

Model Description

This adapter was obtained by fine-tuning LLaMA-2-13b-chat with LoRA on the MultiCONAN dataset (Fanton et al., 2021). It was additionally fine-tuned on a sample of comment-reply pairs from five prominent political subreddits.
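Since the release is a LoRA adapter rather than a full checkpoint, it has to be applied on top of the base model at load time. The following is a minimal sketch of how that might look with the `transformers` and `peft` libraries, assuming both are installed along with `accelerate` (needed for `device_map="auto"`) and that you have access to the gated base model. The system prompt and the `build_prompt` and `generate_counterspeech` helpers are hypothetical names introduced for illustration. The prompt format used during fine-tuning is not documented here, so the standard Llama-2 chat template is only an assumption.

```python
BASE_ID = "meta-llama/Llama-2-13b-chat-hf"
ADAPTER_ID = "alemiaschi/LLaMA-2-13b-chat_CounterSpeech_MuRe"

# Hypothetical instruction: the prompt used for fine-tuning is not stated here.
SYSTEM_PROMPT = "Write a short, civil counterspeech reply to the following comment."


def build_prompt(comment: str, system_prompt: str = SYSTEM_PROMPT) -> str:
    """Wrap a comment in the Llama-2 chat template (an assumption, see above)."""
    return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{comment.strip()} [/INST]"


def generate_counterspeech(comment: str, max_new_tokens: int = 128) -> str:
    """Load the base model, attach the LoRA adapter, and generate one reply."""
    # Heavy imports stay local so build_prompt can be used without them.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(BASE_ID)
    base = AutoModelForCausalLM.from_pretrained(
        BASE_ID, torch_dtype=torch.float16, device_map="auto"
    )
    # Attach the adapter weights on top of the frozen base model.
    model = PeftModel.from_pretrained(base, ADAPTER_ID)
    model.eval()

    inputs = tokenizer(build_prompt(comment), return_tensors="pt").to(base.device)
    with torch.no_grad():
        output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens and decode only the newly generated reply.
    reply_ids = output[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(reply_ids, skip_special_tokens=True).strip()
```

Calling `generate_counterspeech("<toxic comment here>")` downloads both the base weights and the adapter on first use. A 13B model in half precision needs roughly 26 GB of memory for the weights alone, so quantized loading may be worth considering on smaller GPUs.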

Model tree for alemiaschi/LLaMA-2-13b-chat_CounterSpeech_MuRe

Base model

meta-llama/Llama-2-13b-chat-hf