This model is still in its testing phase. We don't recommend it for high-end production environments; it is only a story-generation model.

Model trained using LLaMA-Factory by Asadullah Hamzah at XeTute Technologies.

Phantasor V0.1

We introduce Phantasor V0.1, our first sub-1B-parameter GPT. It was trained on top of GPT-2's smallest version using a little over 1.5M input tokens.

Licensed under MIT, so feel free to use it in your personal projects, both commercially and privately. Since this is V0.1, we're open to feedback to improve our project(s).

The Chat-Template used is Alpaca. For correct usage, insert your prompt as a system prompt. The model can also be used without any template to continue a sequence of text.
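As a rough illustration of the template usage described above, the following sketch assembles an Alpaca-style prompt string with the user's prompt in the system slot. The exact section headers and whitespace used during training are not stated in this card, so the layout below (system text, then `### Instruction:` and `### Response:`) is an assumption based on the common Alpaca format, and `build_alpaca_prompt` is a hypothetical helper name.

```python
def build_alpaca_prompt(system_prompt: str, user_input: str = "") -> str:
    """Assemble an Alpaca-style prompt.

    Assumption: the layout follows the common Alpaca format; the exact
    template used for training Phantasor is not given in the model card.
    """
    lines = [
        system_prompt.strip(),  # the story prompt goes in the system slot
        "",
        "### Instruction:",
        user_input.strip(),     # optional input, e.g. a paragraph to continue
        "",
        "### Response:",
        "",                     # trailing newline: the model writes after this
    ]
    return "\n".join(lines)


prompt = build_alpaca_prompt(
    "A young detective investigates a series of bizarre murders in a small town."
)
print(prompt)
```

The resulting string can be passed to any text-generation interface. To use the model without a template, skip the helper and pass the raw text to be continued.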

You can find the FP32 version here.

Example =)

"System-Prompt": "A young detective investigates a series of bizarre murders in a small town.",

"Response":

"The story begins on an abandoned farm near the center of rural Indiana County, where local and state authorities have not been able to find witnesses for years.

The village's only witness is her sister who lives there with her grandmother.

She says that she sees people walking around their houses screaming at them – but nobody speaks up.

But someone keeps talking about one old lady whom they suspect may be responsible for the killings after weeks of investigation.

It turns out, it was once another resident named Lisa — whose family members claim she disappeared as early this year when investigators found evidence suggesting foul play.

They believe the woman possessed cocaine before leaving, apparently finding drugs like heroin or morphine during her time living here, under cover of darkness.

Their son went missing several months ago and has even called police alleging he witnessed things happening all over town…… So why hasn't anyone come forward?

Is it because those investigating the case won´t cooperate with law enforcement while searching for clues within the shadows?

Could it perhaps be better if others share the details behind these cases so we can continue documenting what happened through investigative means?"

"System-Prompt": "Given a paragraph, continue the story."

"Input":

"[...]

But they knew that they were leaving with something even more precious than their survival – the bonds they had formed with each other.

They hugged and said their goodbyes, knowing that they had become friends for life."

"Response":

"The sun began to set, painting the sky in hues of orange and pink.

The group of strangers sat around the fire, their faces illuminated by its warm glow.

[...]"

Training

This model was trained on all samples and tokens included in:

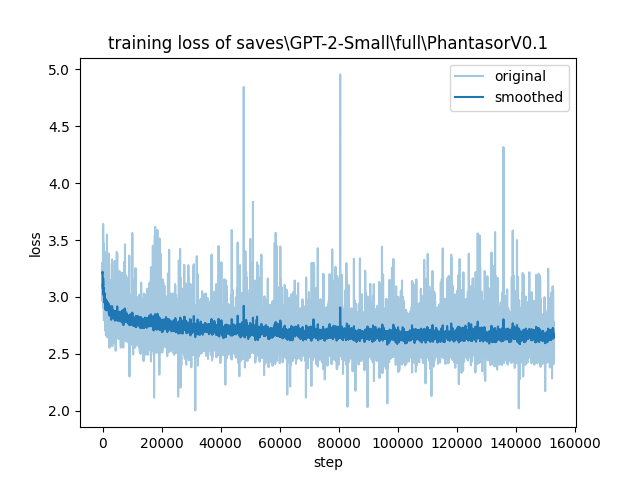

for exactly 3.0 epochs on all model parameters. Below is the loss curve, recorded at each training step over all three epochs.

Instead of AdamW, which is commonly used for large GPTs, we used SGD. In our experience this helped the model generalize better, which shows when it is prompted with text from outside the dataset.
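For readers unfamiliar with the difference between the two optimizers, the update rules can be sketched in plain Python for a single scalar weight. This is a textbook illustration, not the training code behind Phantasor; the function names and all hyperparameter values (learning rate, betas, weight decay) are assumptions chosen for the example.

```python
import math


def sgd_step(w: float, grad: float, lr: float) -> float:
    """Plain SGD: move against the gradient, scaled by the learning rate."""
    return w - lr * grad


def adamw_step(w, grad, m, v, t, lr,
               beta1=0.9, beta2=0.999, eps=1e-8, weight_decay=0.01):
    """One AdamW step with decoupled weight decay (illustrative defaults)."""
    w = w - lr * weight_decay * w                # decoupled weight decay
    m = beta1 * m + (1 - beta1) * grad           # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad    # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                 # bias correction for step t
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


# Same starting weight and gradient, one step each.
w_sgd = sgd_step(1.0, grad=0.5, lr=0.1)
w_adamw, m, v = adamw_step(1.0, grad=0.5, m=0.0, v=0.0, t=1, lr=0.1)
print(w_sgd, w_adamw)
```

SGD keeps no per-parameter state, while AdamW tracks two running averages per parameter and rescales each step by them.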

Finished Model

- ~137M Parameters, all of which are trainable

- A context length of 1024 (1k) input tokens, all of which were used

- A loss of ~2.5 on all samples

This is good performance for a V0.1.
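To put the loss figure in more familiar terms, it can be converted to perplexity. The sketch below assumes the reported loss is the mean per-token cross-entropy measured in nats, which is the usual convention for causal language model training but is not stated explicitly in this card.

```python
import math


def perplexity(cross_entropy_loss: float) -> float:
    """Convert a mean per-token cross-entropy (in nats) to perplexity."""
    return math.exp(cross_entropy_loss)


ppl = perplexity(2.5)
print(f"{ppl:.2f}")  # prints 12.18
```

Under that assumption, a loss of ~2.5 corresponds to a perplexity of roughly 12 on the training samples.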

Our platforms

Socials

BlueSky | YouTube | HuggingFace 🤗 | Ko-Fi / Financially Support Us

Have a great day!
