Lucas Atkins PRO

Crystalcareai

AI & ML interests

MoE Models & Dataset Curation

Recent Activity

Updated a model 8 days ago: arcee-ai/Virtuoso-Lite

Reacted to sometimesanotion's post with 👍 9 days ago:

**Update** Either I plugged in some wrong numbers when estimating benchmark scores from the comparator, or the benchmark changed. Virtuoso Small v2, at a 41.07 average, is still very impressive, especially for writing draft copy for business purposes, while Lamarck remains a chatty generalist-reasoning model.

I've felt confident that 14B Qwen finetunes and merges could break the 42.0 average, and Arcee **came close** with https://huggingface.co/arcee-ai/Virtuoso-Small-2. Congratulations to @arcee-ai!

Just two months ago, it was easy to think that 14B had plateaued: you could have high IFEval or high MuSR/MATH/GPQA at 14B, but not both. That barrier is completely shattered. I see a pathway to even better results, and Virtuoso Small 2 is a big part of why. Very impressive work. This community would expect no less from Arcee.

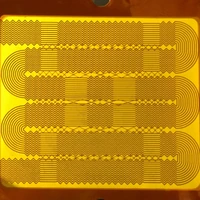

Just look at this graph! Keep in mind, my merges here build on the first Virtuoso Small, and *-DS merges build on DeepSeek R1. There are some impressive merges in the pipe!

Reacted to sometimesanotion's post with 🚀 9 days ago



Crystalcareai's activity

Add Apache-2.0 license :3 (#1, opened 13 days ago by SaisExperiments)

This cross-architecture distillation, with Phi? (#14, opened 26 days ago by sometimesanotion; 2 comments)

Context size (#6, opened 2 months ago by LoSboccacc; 1 comment)

Chat Template (#5, opened 2 months ago by isr431; 2 comments)

Question about model's origin (#2, opened 2 months ago by sometimesanotion; 2 comments)

Fix tokenizer.json with file from Qwen/Qwen2.5-14B (#3, opened 2 months ago by MariusNocturnum; 1 comment)

use the original Qwen2.5-14B-Instruct tokenizer (#4, opened 2 months ago by MaziyarPanahi; 1 comment)

Adding Evaluation Results (#1, opened 2 months ago by leaderboard-pr-bot)

add Instruct datasets and base model (#1, opened 2 months ago by Crystalcareai)

Running on multiple GPUs at the same time to improve performance (#1, opened 2 months ago by sieudd; 1 comment)

Update SuperNova-Medius with a merge with Qwen/Qwen2.5-Coder-14B-Instruct + Further Training 😋 (#12, opened 3 months ago by Joseph717171; 11 comments)

max output tokens? (#11, opened 3 months ago by sirus; 1 comment)

Update _name to "arcee-ai/SuperNova-Medius" (#5, opened 4 months ago by ggbetz)

Adding Evaluation Results (#3, opened 4 months ago by leaderboard-pr-bot)

llama.cpp convert problem report (about `tokenizer.json`) (#2, opened 4 months ago by DataSoul; 6 comments)

2 base models = a nice merge UI on the model page (#1, opened 4 months ago by victor; 2 comments)

Explain these Benchmark Results (#2, opened 4 months ago by Joseph717171; 2 comments)

Distill Llama-3.2-1B-Instruct from Llama-405B-Instruct to make SuperNova-Pico (#14, opened 5 months ago by Joseph717171; 1 comment)

Adding Evaluation Results (#15, opened 4 months ago by CombinHorizon)

Why is the tokenizer.json not the same as LLaMa-3.1-8B-Instruct? (#6, opened 5 months ago by Joseph717171; 1 comment)