Spaces: Running on Zero
Upload 12 files

- .gitattributes +5 -0
- README.md +14 -0
- app.py +98 -0
- example_images/campeones.jpg +3 -0
- example_images/document.jpg +3 -0
- example_images/dogs.jpg +0 -0
- example_images/examples_invoice.png +0 -0
- example_images/examples_weather_events.png +3 -0
- example_images/int.png +3 -0
- example_images/math.jpg +0 -0
- example_images/newyork.jpg +3 -0
- example_images/s2w_example.png +0 -0
- requirements.txt +8 -0

.gitattributes

CHANGED

@@ -46,3 +46,8 @@ assets/11111.png filter=lfs diff=lfs merge=lfs -text
 assets/22222.png filter=lfs diff=lfs merge=lfs -text
 assets/33333.png filter=lfs diff=lfs merge=lfs -text
 assets/44444.png filter=lfs diff=lfs merge=lfs -text
+example_images/campeones.jpg filter=lfs diff=lfs merge=lfs -text
+example_images/document.jpg filter=lfs diff=lfs merge=lfs -text
+example_images/examples_weather_events.png filter=lfs diff=lfs merge=lfs -text
+example_images/int.png filter=lfs diff=lfs merge=lfs -text
+example_images/newyork.jpg filter=lfs diff=lfs merge=lfs -text
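The added `.gitattributes` lines route five of the example images through Git LFS, which matches the five images listed with `+3 -0` in the file summary. As a rough illustration of what those attribute lines express, the sketch below lists the paths whose attributes include `filter=lfs`. It is a simplified reader that only handles literal paths like the ones in this hunk; real `.gitattributes` patterns support globs and macros that it does not attempt. The function name `lfs_paths` is made up for this example.

```python
def lfs_paths(gitattributes_text: str) -> list[str]:
    """Return the path patterns whose attributes include filter=lfs."""
    paths = []
    for line in gitattributes_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            paths.append(pattern)
    return paths


# The five lines added by this commit, copied from the hunk above.
ADDED_LINES = """\
example_images/campeones.jpg filter=lfs diff=lfs merge=lfs -text
example_images/document.jpg filter=lfs diff=lfs merge=lfs -text
example_images/examples_weather_events.png filter=lfs diff=lfs merge=lfs -text
example_images/int.png filter=lfs diff=lfs merge=lfs -text
example_images/newyork.jpg filter=lfs diff=lfs merge=lfs -text
"""

for path in lfs_paths(ADDED_LINES):
    print(path)
```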

README.md

ADDED

@@ -0,0 +1,14 @@
+---
+title: Qwen2.5 VL 3B
+emoji: 🔥
+colorFrom: pink
+colorTo: indigo
+sdk: gradio
+sdk_version: 5.13.1
+app_file: app.py
+pinned: true
+license: creativeml-openrail-m
+short_description: Qwen2.5-VL-3B-Instruct
+---
+
+Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
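The block between the two `---` lines in README.md is the Space's configuration: it names the SDK, its version, and the entry file. A minimal sketch of reading those fields with only the standard library follows. It assumes flat `key: value` pairs like the ones in this README and is not a general YAML parser; `read_front_matter` is a hypothetical helper, not part of any Hugging Face library.

```python
def read_front_matter(readme_text: str) -> dict[str, str]:
    """Parse flat `key: value` pairs between the first two `---` lines."""
    lines = readme_text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}  # no front matter block at the top of the file
    config = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break  # closing delimiter: the rest is the README body
        key, sep, value = line.partition(":")
        if sep:
            config[key.strip()] = value.strip()  # values stay plain strings
    return config


# README.md as added by this commit.
README = """\
---
title: Qwen2.5 VL 3B
emoji: 🔥
colorFrom: pink
colorTo: indigo
sdk: gradio
sdk_version: 5.13.1
app_file: app.py
pinned: true
license: creativeml-openrail-m
short_description: Qwen2.5-VL-3B-Instruct
---

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
"""

config = read_front_matter(README)
print(config["sdk"], config["sdk_version"], config["app_file"])
```

Because the sketch keeps every value as a string, `pinned` comes back as `"true"` rather than a boolean; a real YAML loader would convert it.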

app.py

ADDED

@@ -0,0 +1,98 @@
+import gradio as gr
+from transformers import AutoProcessor, Qwen2_5_VLForConditionalGeneration, TextIteratorStreamer
+from transformers.image_utils import load_image
+from threading import Thread
+import time
+import torch
+import spaces
+
+MODEL_ID = "Qwen/Qwen2.5-VL-3B-Instruct"
+processor = AutoProcessor.from_pretrained(MODEL_ID, trust_remote_code=True)
+model = Qwen2_5_VLForConditionalGeneration.from_pretrained(
+    MODEL_ID,
+    trust_remote_code=True,
+    torch_dtype=torch.bfloat16
+).to("cuda").eval()
+
+@spaces.GPU
+def model_inference(input_dict, history):
+    text = input_dict["text"]
+    files = input_dict["files"]
+
+    # Load images if provided
+    if len(files) > 1:
+        images = [load_image(image) for image in files]
+    elif len(files) == 1:
+        images = [load_image(files[0])]
+    else:
+        images = []
+
+    # Validate input
+    if text == "" and not images:
+        gr.Error("Please input a query and optionally image(s).")
+        return
+    if text == "" and images:
+        gr.Error("Please input a text query along with the image(s).")
+        return
+
+    # Prepare messages for the model
+    messages = [
+        {
+            "role": "user",
+            "content": [
+                *[{"type": "image", "image": image} for image in images],
+                {"type": "text", "text": text},
+            ],
+        }
+    ]
+
+    # Apply chat template and process inputs
+    prompt = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
+    inputs = processor(
+        text=[prompt],
+        images=images if images else None,
+        return_tensors="pt",
+        padding=True,
+    ).to("cuda")
+
+    # Set up streamer for real-time output
+    streamer = TextIteratorStreamer(processor, skip_prompt=True, skip_special_tokens=True)
+    generation_kwargs = dict(inputs, streamer=streamer, max_new_tokens=1024)
+
+    # Start generation in a separate thread
+    thread = Thread(target=model.generate, kwargs=generation_kwargs)
+    thread.start()
+
+    # Stream the output
+    buffer = ""
+    yield "Thinking..."
+    for new_text in streamer:
+        buffer += new_text
+        time.sleep(0.01)
+        yield buffer
+
+
+# Example inputs
+examples = [
+    [{"text": "Explain the movie scene; screen board", "files": ["example_images/int.png"]}],
+    [{"text": "Describe the document?", "files": ["example_images/document.jpg"]}],
+    [{"text": "Describe this image.", "files": ["example_images/campeones.jpg"]}],
+    [{"text": "What does this say?", "files": ["example_images/math.jpg"]}],
+    [{"text": "What is this UI about?", "files": ["example_images/s2w_example.png"]}],
+    [{"text": "Can you describe this image?", "files": ["example_images/newyork.jpg"]}],
+    [{"text": "Can you describe this image?", "files": ["example_images/dogs.jpg"]}],
+    [{"text": "Where do the severe droughts happen according to this diagram?", "files": ["example_images/examples_weather_events.png"]}],
+
+]
+
+demo = gr.ChatInterface(
+    fn=model_inference,
+    description="# **Qwen2.5-VL-3B-Instruct**",
+    examples=examples,
+    textbox=gr.MultimodalTextbox(label="Query Input", file_types=["image"], file_count="multiple"),
+    stop_btn="Stop Generation",
+    multimodal=True,
+    cache_examples=False,
+)
+
+demo.launch(debug=True)
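The core of `model_inference` is a producer/consumer pattern: generation runs in a background thread and pushes text into a streamer, while the generator function iterates over that streamer and yields the growing buffer. The sketch below reproduces only that control flow with the standard library so it can run without a GPU or model weights. `FakeStreamer` and `fake_generate` are stand-ins invented for this example (they are not `transformers` APIs), and `ValueError` stands in for `gr.Error`. One deliberate difference from the diff: `gr.Error` is an exception class that Gradio surfaces when it is raised, and the committed code constructs it without `raise`, so the sketch raises its stand-in instead.

```python
import queue
import threading
from typing import Iterator


class FakeStreamer:
    """Stand-in for TextIteratorStreamer: a blocking iterator over text chunks."""

    _END = object()  # sentinel marking the end of generation

    def __init__(self) -> None:
        self._chunks: queue.Queue = queue.Queue()

    def put(self, chunk: str) -> None:
        self._chunks.put(chunk)

    def end(self) -> None:
        self._chunks.put(self._END)

    def __iter__(self) -> Iterator[str]:
        while True:
            chunk = self._chunks.get()  # blocks until the producer sends something
            if chunk is self._END:
                return
            yield chunk


def fake_generate(prompt: str, streamer: FakeStreamer) -> None:
    """Stand-in for model.generate: emits the prompt's words one at a time."""
    try:
        for word in prompt.split():
            streamer.put(word + " ")
    finally:
        streamer.end()  # always unblock the consumer, even on failure


def stream_reply(input_dict: dict) -> Iterator[str]:
    """Validate the input, start generation in a thread, yield the growing reply."""
    text = input_dict["text"]
    if text == "":
        # In the Gradio app this would be `raise gr.Error(...)`.
        raise ValueError("Please input a text query.")

    streamer = FakeStreamer()
    worker = threading.Thread(
        target=fake_generate, kwargs={"prompt": text, "streamer": streamer}
    )
    worker.start()

    buffer = ""
    yield "Thinking..."  # placeholder shown before the first chunk arrives
    for new_text in streamer:
        buffer += new_text
        yield buffer  # each yield replaces the previous partial reply
    worker.join()


for partial in stream_reply({"text": "hello streaming world", "files": []}):
    print(partial)
```

Yielding the whole buffer each time, rather than only the new chunk, mirrors the committed code: each value yielded by the chat function is shown as the complete message so far.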

example_images/campeones.jpg

ADDED

|

Git LFS Details

|

example_images/document.jpg

ADDED

|

Git LFS Details

|

example_images/dogs.jpg

ADDED

|

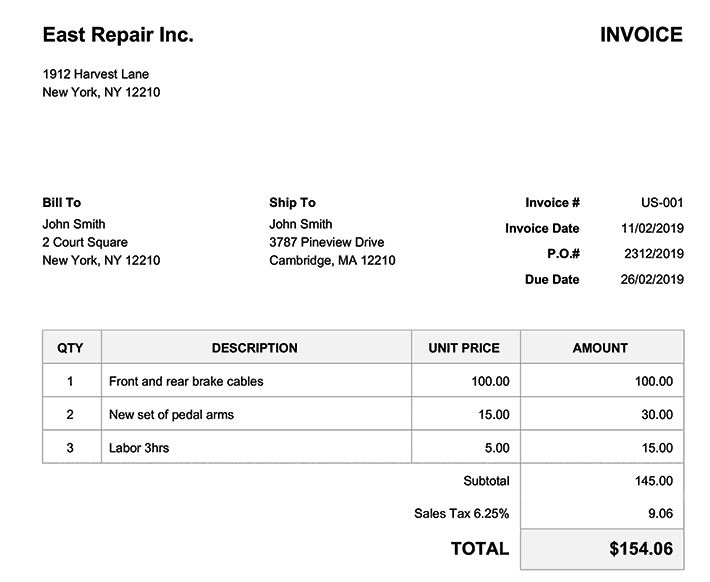

example_images/examples_invoice.png

ADDED

|

example_images/examples_weather_events.png

ADDED

|

Git LFS Details

|

example_images/int.png

ADDED

|

Git LFS Details

|

example_images/math.jpg

ADDED

|

example_images/newyork.jpg

ADDED

|

Git LFS Details

|

example_images/s2w_example.png

ADDED

|

requirements.txt

ADDED

@@ -0,0 +1,8 @@
+gradio_client==1.3.0
+qwen-vl-utils==0.0.2
+transformers-stream-generator==0.0.4
+torch==2.4.0
+torchvision==0.19.0
+git+https://github.com/huggingface/transformers.git
+accelerate
+av