Nathan Butters committed · Commit 5b1c7ed · 1 Parent(s): 58c04e9

Attempt 1 to make this work

- .gitignore +163 -0
- app.py +57 -0
- helpers/chat.py +129 -0
- helpers/constant.py +5 -0
- helpers/systemPrompts.py +18 -0
- requirements.txt +3 -0
- tempDir/picture.png +0 -0

.gitignore

ADDED

@@ -0,0 +1,163 @@

+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+cover/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+.pybuilder/
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+# For a library or package, you might want to ignore these files since the code is
+# intended to run in multiple environments; otherwise, check them in:
+# .python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+pipfile
+Pipfile.lock
+
+# poetry
+# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
+# This is especially recommended for binary packages to ensure reproducibility, and is more
+# commonly ignored for libraries.
+# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
+#poetry.lock
+
+# pdm
+# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
+#pdm.lock
+# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
+# in version control.
+# https://pdm.fming.dev/latest/usage/project/#working-with-version-control
+.pdm.toml
+.pdm-python
+.pdm-build/
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# pytype static type analyzer
+.pytype/
+
+# Cython debug symbols
+cython_debug/
+
+# PyCharm
+# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
+# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
+# and can be added to the global gitignore or merged into this file. For a more nuclear
+# option (not recommended) you can uncomment the following to ignore the entire idea folder.
+#.idea/

app.py

ADDED

@@ -0,0 +1,57 @@

+"""UI for the Math Helper prototype for exploring safety engineering concepts and LLMs.
+Using Streamlit to provide a python-based front end.
+"""
+
+#Import the libraries for human interaction and visualization.
+import streamlit as st
+import logging
+from helpers.constant import *
+from helpers.chat import basicChat, guidedMM, mmChat
+import os
+
+logger = logging.getLogger(__name__)
+logging.basicConfig(filename='app.log', level=logging.INFO)
+
+def update_model(name):
+    if name == "Llama":
+        st.session_state.model = LLAMA
+    else:
+        st.session_state.model = QWEN
+
+
+if "model" not in st.session_state:
+    st.session_state.model = LLAMA
+if "systemPrompt" not in st.session_state:
+    st.session_state.systemPrompt = "Model"
+
+st.set_page_config(page_title="IMSA Math Helper v0.1")
+st.title("IMSA Math Helper v0.1")
+with st.sidebar:
+    # User selects a model
+    model_choice = st.radio("Please select the model:", options=["Llama","QWEN"])
+    update_model(model_choice)
+    logger.info(f"Model changed to {model_choice}.")
+    systemPrompt = st.radio("Designate a control persona:",options=["Model","Tutor"])
+    st.session_state.systemPrompt = systemPrompt
+st.subheader(f"This experience is currently running on {st.session_state.model}")
+
+# Initialize chat history
+if "messages" not in st.session_state:
+    st.session_state.messages = []
+
+# Display chat messages from history on app rerun
+for message in st.session_state.messages:
+    with st.chat_message(message["role"]):
+        st.markdown(message["content"])
+
+
+enable = st.checkbox("Enable camera")
+picture = st.camera_input("Take a picture of your math work", disabled=not enable)
+
+if picture is not None:
+    with open(os.path.join("tempDir","picture.png"),"wb") as f:
+        f.write(picture.getbuffer())
+    mmChat("./tempDir/picture.png")
+    #guidedMM(st.session_state.systemPrompt, "http://192.168.50.36:8501/tempDir/picture")
+else:
+    basicChat()
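Review note: `app.py` writes the camera frame to `tempDir/picture.png` and hands the local path `./tempDir/picture.png` to `mmChat`, which forwards it as the `image_url`. A hosted inference endpoint generally cannot read a path on the app's disk. One common alternative is to inline the image as a base64 data URL. The sketch below is only an illustration under that assumption: `to_data_url` is a hypothetical helper that is not part of this commit, and whether the selected model's endpoint accepts data URLs would need to be verified.

```python
import base64


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a data URL (hypothetical helper, not in the commit)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


if __name__ == "__main__":
    # Stand-in bytes; in app.py the bytes could come from picture.getvalue().
    print(to_data_url(b"abc"))
```

With a helper like this, the temporary file would no longer be needed; `mime_type` should match whatever format the camera widget actually produces.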

helpers/chat.py

ADDED

@@ -0,0 +1,129 @@

+import streamlit as st
+import logging
+from huggingface_hub import InferenceClient
+from helpers.systemPrompts import base, tutor
+import os
+logger = logging.getLogger(__name__)
+
+api_key = os.environ.get('hf_api')
+client = InferenceClient(api_key=api_key)
+
+def hf_stream(model_name: str, messages: dict):
+    stream = client.chat.completions.create(
+        model=model_name,
+        messages=messages,
+        max_tokens=1000,
+        stream=True)
+    for chunk in stream:
+        chunk.choices[0].delta.content, end=""
+
+def hf_generator(model,prompt,data):
+    messages = [
+        {
+            "role": "user",
+            "content": [
+                {
+                    "type": "text",
+                    "text": prompt
+                },
+                {
+                    "type": "image_url",
+                    "image_url": {
+                        "url": data
+                    }
+                }
+            ]
+        }
+    ]
+
+    completion = client.chat.completions.create(
+        model=model,
+        messages=messages,
+        max_tokens=500
+    )
+    return completion.choices[0].message
+
+def basicChat():
+    # Accept user input and then writes the response
+    if prompt := st.chat_input("How may I help you learn math today?"):
+        # Add user message to chat history
+        st.session_state.messages.append({"role": "user", "content": prompt})
+        logger.info(st.session_state.messages[-1])
+        # Display user message in chat message container
+        with st.chat_message("user"):
+            st.markdown(prompt)
+
+        with st.chat_message(st.session_state.model):
+            logger.info(f"""Message to {st.session_state.model}: {[
+                {"role": m["role"], "content": m["content"]}
+                for m in st.session_state.messages
+                ]}""")
+            response = st.write_stream(hf_generator(
+                st.session_state.model,
+                [
+                    {"role": m["role"], "content": m["content"]}
+                    for m in st.session_state.messages
+                ]
+            ))
+        st.session_state.messages.append({"role": "assistant", "content": response})
+        logger.info(st.session_state.messages[-1])
+
+
+def mmChat(data):
+    if prompt := st.chat_input("How may I help you learn math today?"):
+        # Add user message to chat history
+        st.session_state.messages.append({"role": "user", "content": prompt,"images":[data]})
+        logger.info(st.session_state.messages[-1])
+        # Display user message in chat message container
+        with st.chat_message("user"):
+            st.markdown(prompt)
+
+        with st.chat_message(st.session_state.model):
+            logger.info(f"Message to {st.session_state.model}: {st.session_state.messages[-1]}")
+            response = st.write_stream(hf_generator(
+                st.session_state.model,
+                prompt,
+                data))
+        st.session_state.messages.append({"role": "assistant", "content": response})
+        logger.info(st.session_state.messages[-1])
+
+
+def guidedMM(sysChoice:str, data):
+    if sysChoice == "Tutor":
+        system = tutor
+    else:
+        system = base
+
+    if prompt := st.chat_input("How may I help you learn math today?"):
+        # Add user message to chat history
+        st.session_state.messages.append([
+            {
+                "role": "user",
+                "content": [
+                    {
+                        "type": "text",
+                        "text": prompt
+                    },
+                    {
+                        "type": "image_url",
+                        "image_url": {
+                            "url": data
+                        }
+                    }
+                ]
+            }
+        ])
+        logger.info(st.session_state.messages[-2:])
+        # Display user message in chat message container
+        with st.chat_message("user"):
+            st.markdown(prompt)
+
+        with st.chat_message(st.session_state.model):
+            logger.info(f"Message to {st.session_state.model}: {st.session_state.messages[-1]}")
+            response = st.write_stream(hf_generator(
+                st.session_state.model,
+                [st.session_state.messages[-1]]
+            ))
+        st.session_state.messages.append({"role": "assistant", "content": response})
+        logger.info(st.session_state.messages[-1])
+
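Review note: `st.write_stream` is meant to consume a generator or other iterable of chunks, but `hf_generator` returns a single message object and `hf_stream` loops over the stream without yielding anything. In addition, `basicChat` and `guidedMM` call `hf_generator` with two arguments although it takes three. The sketch below shows one possible shape for the streaming part only. `stream_text` and `fake_chunk` are hypothetical names; the fake chunks merely mimic the `choices[0].delta.content` attribute path that the commit already uses, so the example runs without a network call.

```python
from types import SimpleNamespace
from typing import Iterable, Iterator


def stream_text(chunks: Iterable) -> Iterator[str]:
    """Yield the text of each streamed chunk, skipping empty deltas."""
    for chunk in chunks:
        text = chunk.choices[0].delta.content
        if text:
            yield text


def fake_chunk(text):
    """Build a stand-in for one streamed chunk (test double, not a real API object)."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


if __name__ == "__main__":
    demo = [fake_chunk("2 + 2"), fake_chunk(None), fake_chunk(" = 4")]
    print("".join(stream_text(demo)))
```

In the app, the iterable passed to `stream_text` would be the object returned by `client.chat.completions.create(..., stream=True)`, and the resulting generator could then be handed to `st.write_stream`.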

helpers/constant.py

ADDED

@@ -0,0 +1,5 @@

+"The file holding constants used within the program."
+
+#Available Models
+LLAMA = "meta-llama/Llama-3.2-11B-Vision-Instruct"
+QWEN = "Qwen/Qwen2-VL-7B-Instruct"
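Review note: `update_model` in `app.py` maps the radio-button label to one of these constants with an `if`/`else`, so any label other than `"Llama"` silently selects Qwen. A lookup table is one way to make the mapping explicit. This is a sketch only: `MODELS` and `resolve_model` are hypothetical names that are not in the commit, and falling back to `LLAMA` (the session default in `app.py`) is an assumption about the desired behaviour.

```python
LLAMA = "meta-llama/Llama-3.2-11B-Vision-Instruct"
QWEN = "Qwen/Qwen2-VL-7B-Instruct"

# Radio-button label -> model identifier (labels taken from app.py).
MODELS = {"Llama": LLAMA, "QWEN": QWEN}


def resolve_model(label: str, default: str = LLAMA) -> str:
    """Return the model identifier for a UI label, or the default if unknown."""
    return MODELS.get(label, default)


if __name__ == "__main__":
    print(resolve_model("QWEN"))
```

The radio options could then be built from `list(MODELS)`, so that adding a model needs only one new dictionary entry.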

helpers/systemPrompts.py

ADDED

|

@@ -0,0 +1,18 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

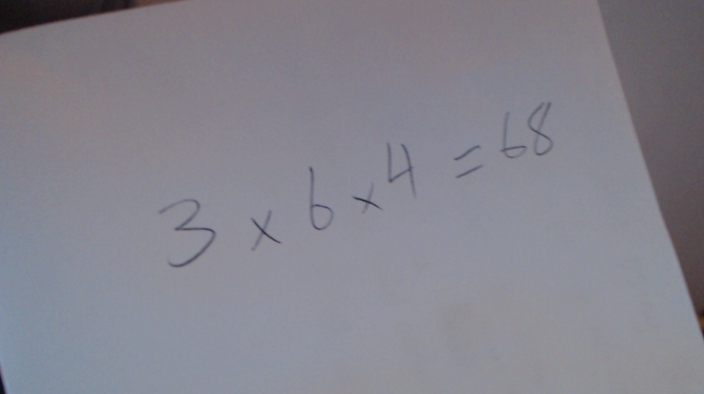

+''' A place to hold system prompts to control for them and allow users to pick which one they want to work with.'''
+
+base = """
+You are a helpful assistant. Follow these instructions:
+* Check that the image contains a math problem.
+* Analyze the math for errors and describe the errors succinctly.
+* Do not provide the correct answer.
+"""
+
+tutor = """
+You are a tutor helping the user learn math. Follow these instructions:
+* Check that the image contains a math problem, if it doesn't ask the user to update the image
+* Analyze the math in the image for errors and describe where they are
+* Be succinct in your answers
+* Do not provide the correct answer
+* Guide the user towards correcting the errors found
+* If they provide you a correct math problem, congratulate them by saying: "Well done, you should be proud."
+"""
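Review note: `guidedMM` in `helpers/chat.py` picks `tutor` or `base` into a local `system` variable but never includes it in the request, so neither prompt reaches the model. The sketch below shows one way the prompt could be combined with the user's text and image, using the same message layout as `hf_generator`. `build_messages` is a hypothetical helper that is not in the commit, and it assumes the selected vision model accepts a `system` role message, which would need to be checked per model.

```python
def build_messages(system_prompt: str, user_text: str, image_url: str) -> list:
    """Assemble a chat payload: one system message, then one text + image user message."""
    return [
        {"role": "system", "content": system_prompt.strip()},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": user_text},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        },
    ]


if __name__ == "__main__":
    demo = build_messages("\nYou are a tutor.\n", "Is step 2 right?", "https://example.com/work.png")
    print(demo[0])
```

The `strip()` call removes the leading and trailing newlines that the triple-quoted prompts above carry.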

requirements.txt

ADDED

@@ -0,0 +1,3 @@

+huggingface_hub
+colorama
+streamlit

tempDir/picture.png

ADDED
