Update README.md

README.md

|

@@ -22,7 +22,7 @@ The model intended to be used for encoding sentences or short paragraphs. Given

|

|

| 22 |

The model was trained on a random collection of **English** sentences from Wikipedia: [Training data file](https://huggingface.co/datasets/princeton-nlp/datasets-for-simcse/resolve/main/wiki1m_for_simcse.txt)

|

| 23 |

|

| 24 |

# Model Usage

|

| 25 |

-

|

| 26 |

|

| 27 |

```python

|

| 28 |

from transformers import AutoTokenizer, AutoModel

|

|

@@ -69,7 +69,7 @@ cos_sim = sim(embeddings.unsqueeze(1),

|

|

| 69 |

print(f"Distance: {cos_sim[0,1].detach().item()}")

|

| 70 |

```

|

| 71 |

|

| 72 |

-

|

| 73 |

|

| 74 |

```python

|

| 75 |

from transformers import AutoTokenizer, AutoModel

|

|

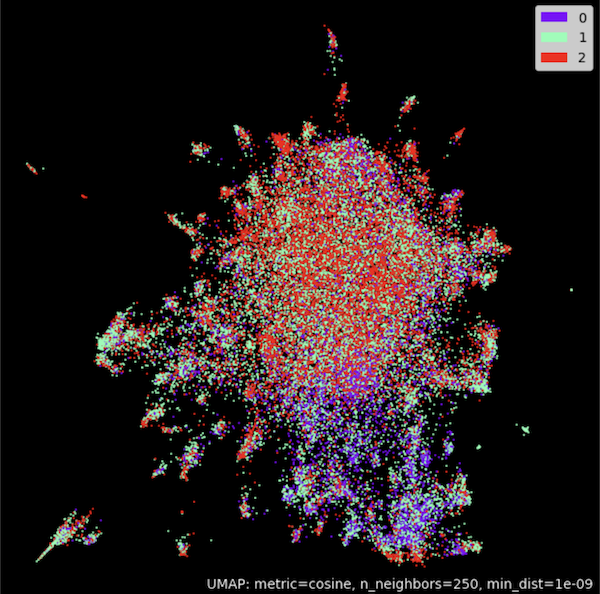

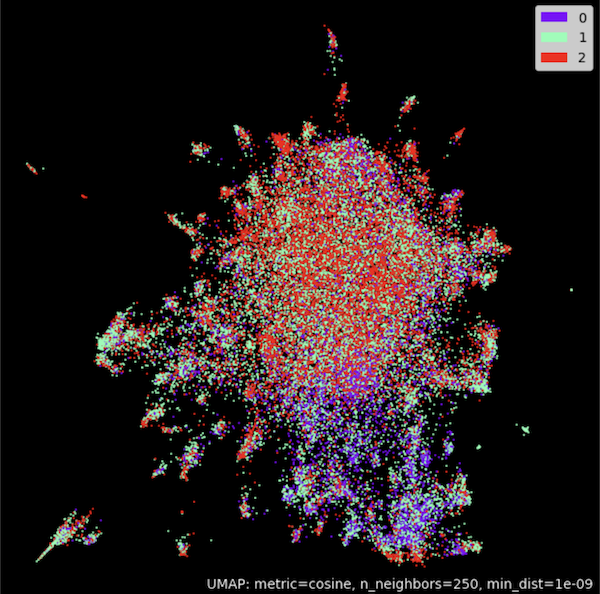

@@ -147,7 +147,7 @@ umap_plot.points(umap_model, labels = np.array(classes),theme='fire')

|

|

| 147 |

|

| 148 |

|

| 149 |

|

| 150 |

-

|

| 151 |

|

| 152 |

```python

|

| 153 |

from sentence_transformers import SentenceTransformer, util

|

|

|

|

| 22 |

The model was trained on a random collection of **English** sentences from Wikipedia: [Training data file](https://huggingface.co/datasets/princeton-nlp/datasets-for-simcse/resolve/main/wiki1m_for_simcse.txt)

|

| 23 |

|

| 24 |

# Model Usage

|

| 25 |

+

### Example 1) - Sentence Similarity

|

| 26 |

|

| 27 |

```python

|

| 28 |

from transformers import AutoTokenizer, AutoModel

|

|

|

|

| 69 |

print(f"Distance: {cos_sim[0,1].detach().item()}")

|

| 70 |

```

|

| 71 |

|

| 72 |

+

### Example 2) - Clustering

|

| 73 |

|

| 74 |

```python

|

| 75 |

from transformers import AutoTokenizer, AutoModel

|

|

|

|

| 147 |

|

| 148 |

|

| 149 |

|

| 150 |

+

### Example 3) - Using [SentenceTransformers](https://www.sbert.net/)

|

| 151 |

|

| 152 |

```python

|

| 153 |

from sentence_transformers import SentenceTransformer, util

|
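The context of the second hunk (`cos_sim = sim(embeddings.unsqueeze(1), ...` followed by `cos_sim[0,1]`) computes cosine similarities between sentence embeddings by broadcasting. The diff truncates that code, so the sketch below is not the README's implementation: it is a minimal NumPy stand-in using made-up toy vectors, assuming the goal is an (n, n) matrix whose entry `[i, j]` is the cosine similarity of sentences `i` and `j`. The helper name `pairwise_cosine` is hypothetical. The value it produces is a similarity (1.0 means the vectors point the same way), even though the README's print statement labels it "Distance".

```python
import numpy as np

def pairwise_cosine(embeddings: np.ndarray) -> np.ndarray:
    """Hypothetical helper: (n, n) cosine similarities between row vectors."""
    # Normalise each row to unit length so that dot products become cosines;
    # the clip avoids division by zero for an all-zero row.
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    unit = embeddings / np.clip(norms, 1e-12, None)
    # Broadcast (n, 1, d) against (1, n, d), analogous to unsqueeze(1) and
    # unsqueeze(0) in PyTorch, then sum over the embedding dimension.
    return (unit[:, None, :] * unit[None, :, :]).sum(axis=-1)

# Toy 3-dimensional "embeddings" for three sentences (made-up values,
# not produced by the model described in the README).
embeddings = np.array([
    [1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0],
    [0.0, 0.0, 2.0],
])

cos_sim = pairwise_cosine(embeddings)
print(f"Similarity: {cos_sim[0, 1]:.4f}")  # 1/sqrt(2), printed as 0.7071
```

Normalising first and then taking dot products is equivalent to dividing each dot product by the product of the two vector norms; with real sentence embeddings the vectors would come from the model's pooled outputs instead of the toy array.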