CARROT-LLM-Routing

Cost AwaRe Rate Optimal rouTing

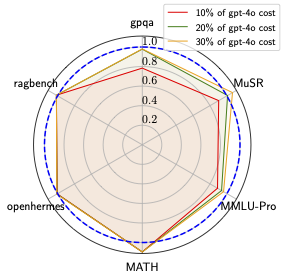

Welcome to CARROT-LLM-Routing! For a given trade-off between performance and cost, CARROT makes it easy to pick the best model among a set of 13 LLMs for any query. Below you can read the CARROT paper, access the CARROT code, or see how to use CARROT out of the box for routing.

As is, CARROT supports routing to the collection of large language models listed in the table below. Instantiating the CarrotRouter class automatically loads the trained predictors for output token count and performance, which are hosted in the CARROT-LLM-Router model repositories. Note that you must provide a Hugging Face token with access to the Llama-3 herd of models. To control the cost-performance trade-off, pass the router a value between 0 and 1 for mu. A smaller mu prioritizes performance; a larger mu prioritizes low cost. Happy routing!

| | claude-3-5-sonnet-v1 | titan-text-premier-v1 | openai-gpt-4o | openai-gpt-4o-mini | granite-3-2b-instruct | granite-3-8b-instruct | llama-3-1-70b-instruct | llama-3-1-8b-instruct | llama-3-2-1b-instruct | llama-3-2-3b-instruct | llama-3-3-70b-instruct | mixtral-8x7b-instruct | llama-3-405b-instruct |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Input Token Cost ($ per 1M tokens) | 3 | 0.5 | 2.5 | 0.15 | 0.1 | 0.2 | 0.9 | 0.2 | 0.06 | 0.06 | 0.9 | 0.6 | 3.5 |
| Output Token Cost ($ per 1M tokens) | 15 | 1.5 | 10 | 0.6 | 0.1 | 0.2 | 0.9 | 0.2 | 0.06 | 0.06 | 0.9 | 0.6 | 3.5 |
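To make the table concrete, here is a minimal sketch of how a per-query dollar cost follows from these prices, and how a value of mu could trade that cost against quality. The token counts, the performance and normalized cost numbers, and the scoring rule `(1 - mu) * performance - mu * cost` are illustrative assumptions, not taken from carrot.py; CARROT's actual predictors and routing rule may differ.

```python
# Prices copied from the table above: (input, output) in $ per 1M tokens.
PRICES = {
    "claude-3-5-sonnet-v1": (3.0, 15.0),
    "openai-gpt-4o": (2.5, 10.0),
    "openai-gpt-4o-mini": (0.15, 0.6),
    "llama-3-1-8b-instruct": (0.2, 0.2),
}


def query_cost(model, input_tokens, output_tokens):
    """Dollar cost of one call, given its input and output token counts."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


def pick_model(perf, cost, mu):
    """Pick the model with the highest (1 - mu) * perf - mu * cost.

    ASSUMPTION: this scoring rule is only an illustration of a
    cost-performance trade-off, not necessarily the rule CARROT uses.
    """
    return max(perf, key=lambda m: (1 - mu) * perf[m] - mu * cost[m])


# Hypothetical call: 1,000 input tokens and 500 output tokens.
example_cost = query_cost("claude-3-5-sonnet-v1", 1000, 500)
print(f"Example cost: ${example_cost:.4f}")

# Hypothetical predictions, both scaled to [0, 1] (made-up numbers).
perf = {"claude-3-5-sonnet-v1": 0.9, "openai-gpt-4o-mini": 0.7}
cost = {"claude-3-5-sonnet-v1": 1.0, "openai-gpt-4o-mini": 0.05}

print(pick_model(perf, cost, mu=0.0))  # performance only
print(pick_model(perf, cost, mu=1.0))  # cost only
```

With these made-up numbers, mu = 0 selects the model with the higher performance score and mu = 1 selects the cheaper one, which matches the direction described above: a smaller mu prioritizes performance.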

Example: Using CARROT for Routing

```python
# Download carrot.py
!git clone https://github.com/somerstep/CARROT.git
# git clone creates a directory named after the repository
%cd CARROT

from carrot import CarrotRouter

# Initialize the router (the token needs access to the Llama-3 models)
router = CarrotRouter(hf_token='YOUR_HF_TOKEN')

# Define a query (passed to the router as a list)
query = ["What is the value of i^i?"]

# Get the best model for the chosen cost-performance trade-off
best_model = router.route(query, mu=0.3)
print(f"Recommended Model: {best_model[0]}")
```
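To see how the recommendation shifts with mu, one option is to route the same query at several values. The sketch below is a hypothetical helper, not part of carrot.py. It assumes only what the example above shows: that `route` accepts a list of queries and a `mu` keyword, and returns one recommendation per query.

```python
def sweep_mu(router, query, mus=(0.0, 0.25, 0.5, 0.75, 1.0)):
    """Return a {mu: recommended model} mapping for a single query string.

    `router` is any object with a route(queries, mu=...) method that
    returns one recommendation per query, as in the example above.
    """
    return {mu: router.route([query], mu=mu)[0] for mu in mus}
```

For example, `sweep_mu(router, "What is the value of i^i?")` would return one recommended model per mu value, which makes it easy to spot the point at which the router switches from a stronger model to a cheaper one.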